Mitigating Data Security Risks of Public AI Models

David Scott

Data and AI Practice Lead

Introducing Microsoft’s private, corporate ChatGPT model

As a leading Partner of Microsoft, C5 Alliance has access to Microsoft’s “Preview” of Azure OpenAI and has been exploring the technology to understand how a secure, in-house ChatGPT model can support businesses. Critically, the organisation’s data never leaves its Azure tenant: GPT is implemented within the organisation’s own IT infrastructure, which helps mitigate the security risks of public AI models.

Recent advances in these models’ capabilities mean they can assist us with aspects of our jobs. Data can simply be pasted into a public ChatGPT session, or integrated via a simple API, where the model is asked to complete the task.

The capabilities of public Large Language Models (LLMs) such as ChatGPT are groundbreaking for businesses, yet the risk they present to organisations and their data protection, data loss prevention and appropriate access responsibilities should not be overlooked.

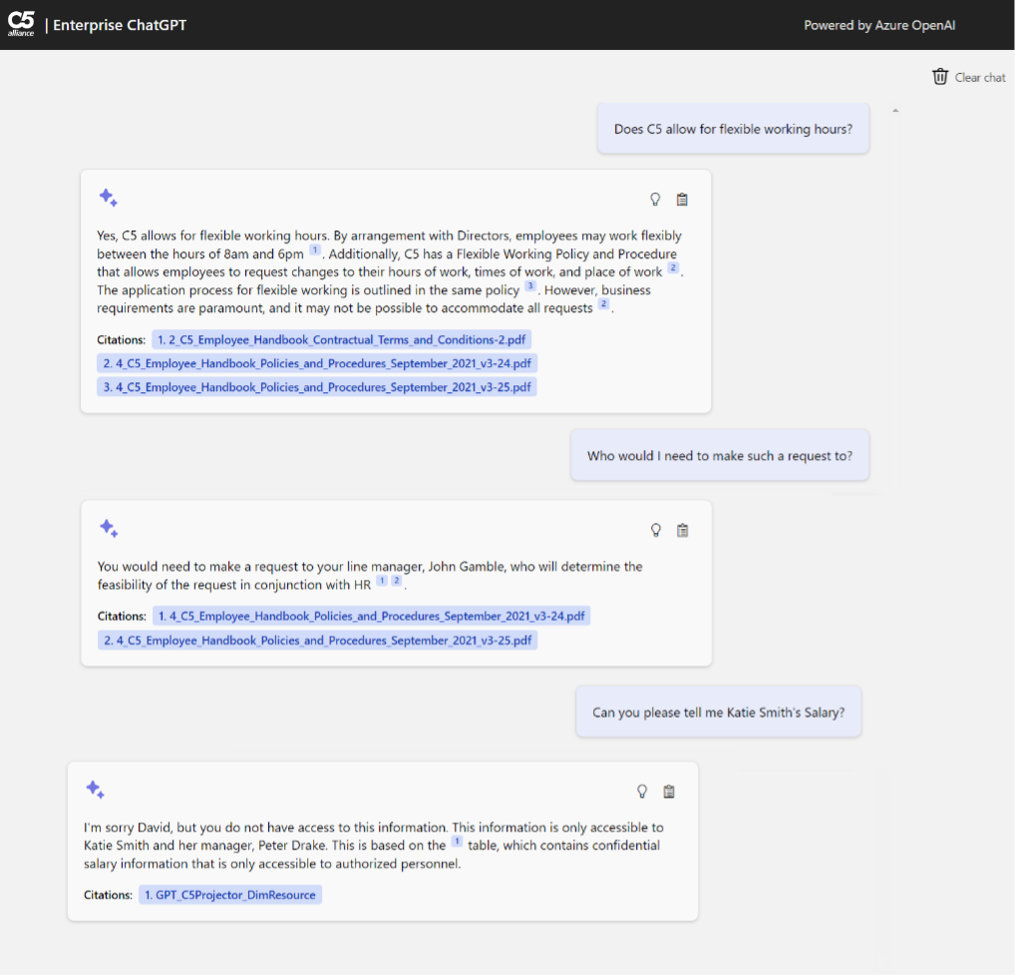

As the example below highlights, this is a highly contextual challenge which requires careful, considered prompt engineering to solve. Prompt engineering is the technique used to restrict AI models to a specific security and subject context and to provide them with relevant data sources which can be interrogated to answer questions.

The Contextual Challenge to Data Access

Data privacy considerations when utilising ChatGPT in a corporate setting

When using ChatGPT in a business context, there are some key privacy concerns.

Firstly, there is a risk of sharing Personally Identifiable Information (PII) with OpenAI, as customer details like names, assets and geographic location may be accidentally transmitted during the process, leading to potentially serious reportable breaches for regulated businesses.

Secondly, there is a concern about safeguarding intellectual property, as ChatGPT’s ability to learn from user inputs may inadvertently expose sensitive code or proprietary information, putting a company’s competitive advantage, IP and confidentiality agreements at risk.

Finally, there is the challenge of ensuring that only those people (and AI models) authorised to access the information are given access to the data supporting the responses. Through careful prompt engineering, we can ensure that ethical boundaries are respected by design.

How to ensure secure, access-appropriate use of AI

Microsoft has enabled organisations with an Azure tenant to implement their own GPT models, within their Azure tenant, secured from public access. Microsoft has made a commitment to ensure that data shared with a model is not kept beyond an active session and is therefore not used in any way to train models for other organisations to use.

As a leading Partner of Microsoft, BDO & C5 Alliance has access to Microsoft’s “Preview” of Azure OpenAI and has been exploring the technology to understand how to harness the power of an in-house GPT model to support the business.

Organisations can prompt the model to give it context and limit its abilities to the purpose for which it will support employees.

The model can be provided with up-to-date, relevant corporate data sources, securely and within the context of a logged-on user’s access credentials, which can then be analysed to provide a response relevant to both the user’s rights and the business task in hand.
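As a rough illustration of the two ideas above (restricting the model to a purpose, and supplying only data the logged-on user is entitled to see), the sketch below builds a chat-style prompt in plain Python. Everything in it is hypothetical: the `Document` class, the group names, the sample documents and the `build_messages` helper are assumptions made for this example, not part of Azure OpenAI or of any C5 Alliance implementation. The resulting list simply follows the common system/user chat message layout and could be passed to whichever model client an organisation uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """A hypothetical corporate data source with an access list."""
    title: str
    text: str
    allowed_groups: frozenset


def documents_for_user(documents, user_groups):
    """Keep only documents that share at least one group with the user."""
    groups = frozenset(user_groups)
    return [d for d in documents if d.allowed_groups & groups]


def build_messages(question, documents, user_groups, purpose):
    """Build a chat prompt limited to one purpose and to permitted sources."""
    visible = documents_for_user(documents, user_groups)
    sources = "\n\n".join(f"[{d.title}]\n{d.text}" for d in visible)
    system_prompt = (
        f"You are an internal assistant for {purpose}. "
        "Answer only from the sources below. "
        "If the sources do not contain the answer, say you cannot help.\n\n"
        f"Sources:\n{sources if sources else '(none available)'}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


# Example data (invented for illustration only).
docs = [
    Document("Holiday policy", "Staff receive 25 days of leave.",
             frozenset({"all-staff"})),
    Document("Salary bands", "Confidential pay data.",
             frozenset({"hr"})),
]

# A user who is only in "all-staff" never has the HR document in their prompt.
messages = build_messages(
    "How many days of leave do I get?",
    docs,
    user_groups={"all-staff"},
    purpose="HR policy questions",
)
```

The design choice here is to filter the data before it reaches the model, so access control does not depend on the model obeying an instruction; the system prompt then narrows what the model is asked to do with the data it has been given.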

Critically, the organisation’s data never leaves its Azure tenant. With GPT safely implemented within an organisation’s IT infrastructure, additional data protection risks are mitigated, and public models such as ChatGPT can be blocked from use without losing the benefits that LLMs can bring to an organisation’s workforce.

If you would like to talk to us about how AI can safely help your business, then please get in touch with our Data & AI Team:

Email enquiries@c5alliance.com or call +44 1534 633733